Mastering the Art of User Research.

As I have said before, taking notes is rife with danger. It’s so tempting to just write down everything that happens. But you probably can’t deal with all that data. First, it’s just too much. Second, it’s not organized. Let’s go back to the example from the last post. The research question was, “Do people make more errors on one version of the system than the other?” And we chose these measures to answer it:

- Count of all incorrect selections (errors)

- Count and location of incorrect menu choices

- Count and location of incorrect buttons selected

- Count of errors of omission

- Count and location of visits to online help

- Number and percentage of tasks completed incorrectly

Here’s what I did. First, I listed the page (sometimes I put in a screen shot), with the correct action and then the possible (predictable, known) errors, like this:

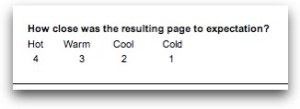

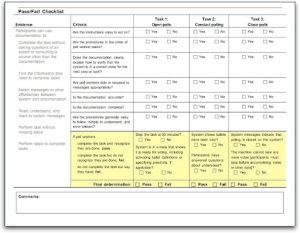

I hope you can see how to turn this into a pick list. You can just check these items off or number them if people do more than one of the errors. For my note taking forms, I added a choice for “Other” in case something happened that I hadn’t anticipated.

This is from a different study, but you get the idea: This is what your notes end up looking like. Even if you’re not doing statistical analyses, it’s easy to hold up these pages together across participants to see how many had problems and how severe the problems were.
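If you keep the forms electronically rather than on paper, the same pick-list idea can be sketched in a few lines of Python. This is only an illustration, not the form I used: the page name, the correct action, the error labels, and the participant IDs are all made up, and anything that isn’t on the list gets filed under “Other,” just like on the paper form.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class PickListPage:
    """One page of a note-taking form: the correct action plus known errors."""
    page: str
    correct_action: str
    known_errors: list[str]
    # participant id -> errors observed, in the order they happened
    observations: dict[str, list[str]] = field(default_factory=dict)

    def record(self, participant: str, error: str) -> None:
        # Anything not on the pick list is filed under "Other".
        label = error if error in self.known_errors else "Other"
        self.observations.setdefault(participant, []).append(label)

    def participants_per_error(self) -> Counter:
        # Count each participant at most once per error type.
        counts = Counter()
        for errors in self.observations.values():
            counts.update(set(errors))
        return counts


# Hypothetical page and error labels, for illustration only.
form = PickListPage(
    page="Ballot review",
    correct_action="Select the Review button",
    known_errors=["Wrong menu item", "Wrong button", "Visited online help"],
)
form.record("P1", "Wrong menu item")
form.record("P1", "Wrong menu item")   # same error twice, same participant
form.record("P2", "Wrong button")
form.record("P2", "Dropped the stylus")  # not anticipated, so it becomes "Other"

counts = form.participants_per_error()
```

Here `counts` tells you how many participants hit each error at least once, which is the electronic version of holding the pages up side by side.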

A common mistake newbies make when they’re conducting their first usability tests is taking verbatim notes.

Note taking for summative tests can be pretty straightforward. For those you should have benchmark data that you’re comparing against or at least clear success criteria. In that case, data collecting could (and probably should) be done mostly by the recording software (such as Morae). But for formative or exploratory tests, note taking can be more complex.

Why is it so tempting to write down everything?

Interesting things keep happening! Just last week I was the note taker for a summative test in which I noticed (after about 30 sessions) that women and men seemed to hold the stylus differently when marking what we were testing, and that this difference seemed to be causing a specific category of errors.

But the test wasn’t about using the hardware. This issue wasn’t something we had listed in our test plan as a measure. It was interesting, but not something we could investigate for this test. We will include it as an incidental observation in the report as something to research later.

Note-taking don’ts

- Don’t take notes yourself when you are moderating the session, if you can help it.

- Don’t take verbatim notes. Ever. If you want that, record the sessions and get transcripts. (Or do what Steve Krug does, and listen to the recordings and re-dictate them into a speech recognition application.)

- Don’t take notes on anything that doesn’t line up with your research questions.

- Don’t take notes on anything that you aren’t going to report on (either because you don’t have time or it isn’t in the scope of the test).

Tips and tricks

- DO get observers to take notes. This is, in part, what observers are for. Give them specific things to look for. Some usability specialists like to get observer notes on large sticky notes, which is handy for the debriefing sessions.

- DO create pick lists, use screen shots, or draw trails. For example, for one study, I was trying to track a path through a web site to see if the IA worked. I printed out the first 3 levels of the IA as nested lists in 2 columns so that it fit on one legal-sized sheet of paper. Then I used colored highlighters to draw arrows from one topic label to the next as the participant moved through the site, numbering as I went. It was reasonably easy to transfer this data to Excel spreadsheets later to do further analysis.

- DO get participants to take notes for you. If the session is very formative, get the participants to mark up wireframes, screen flows, or other paper widgets to show where they had issues. For example, you might want to find out if a flow of screens matches the process a user typically follows. Start the session by asking the participant to draw a boxes-and-arrows diagram of their process. At the end of the session, ask the participant to revise the diagram to a) capture any refinements they may have forgotten, b) show gaps between their process and how the application works, or c) do some variation or combination of a and b.

- DO think backward from the report. If you have written a test plan, you should be able to use that as a basis for the final report. What are you going to report on? (Hint: the answers to your research questions, using the measures you said you were going to collect.)
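Going back to the trails tip: if you want to move a highlighter trail into a spreadsheet, one way is to type in the topic labels in the order the participant visited them and turn them into numbered steps. The sketch below is an assumption about how you might lay that out, not the sheet I actually built. The column names, the participant ID, and the topic labels are hypothetical, and the output is plain CSV text that Excel can open.

```python
import csv
import io

FIELDS = ["participant", "step", "from", "to"]


def path_to_rows(participant, labels):
    """Turn an ordered list of visited topic labels into numbered from/to steps."""
    rows = []
    for step, (src, dst) in enumerate(zip(labels, labels[1:]), start=1):
        rows.append({"participant": participant, "step": step, "from": src, "to": dst})
    return rows


def rows_to_csv(rows):
    """Write the steps as CSV text, one arrow of the trail per line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical trail for one participant.
rows = path_to_rows("P1", ["Home", "Benefits", "Eligibility", "Benefits"])
csv_text = rows_to_csv(rows)
```

Each row is one arrow from the paper sheet, so backtracking (like the return to the same label above) stays visible when you sort or filter later.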